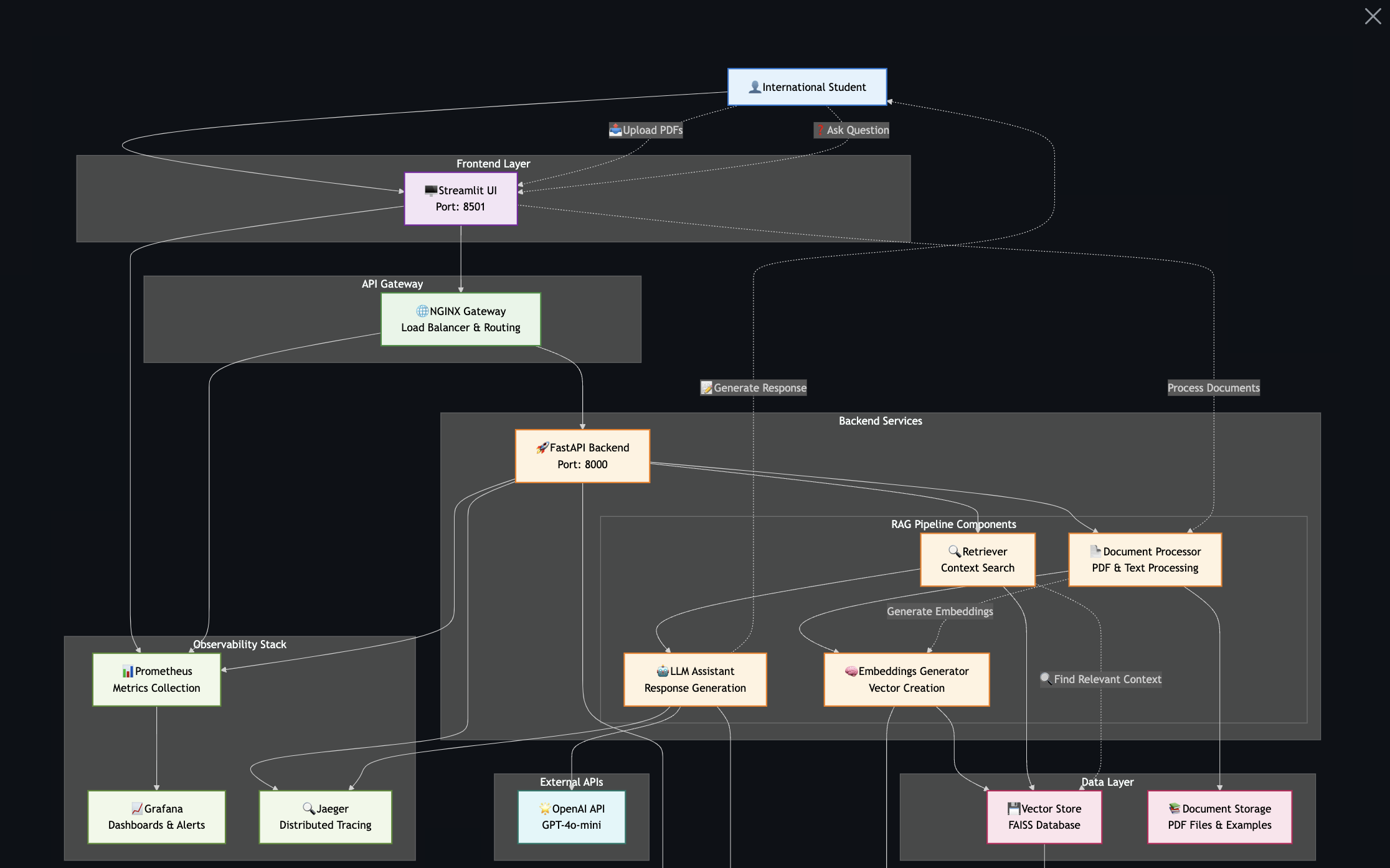

OPT-RAG is a Retrieval-Augmented Generation (RAG) application that helps international students navigate visa-related questions, OPT (Optional Practical Training) applications, and other immigration concerns. It answers questions by retrieving relevant passages from official documentation and immigration policies and grounding the model's response in that retrieved context. Built as a set of microservices, the application consists of a FastAPI backend for document processing and query handling, a Streamlit frontend for user interaction, and a monitoring stack for observability.

- Stack: Python, FastAPI, Qwen2.5-1.5B, Kubernetes, Docker, Streamlit

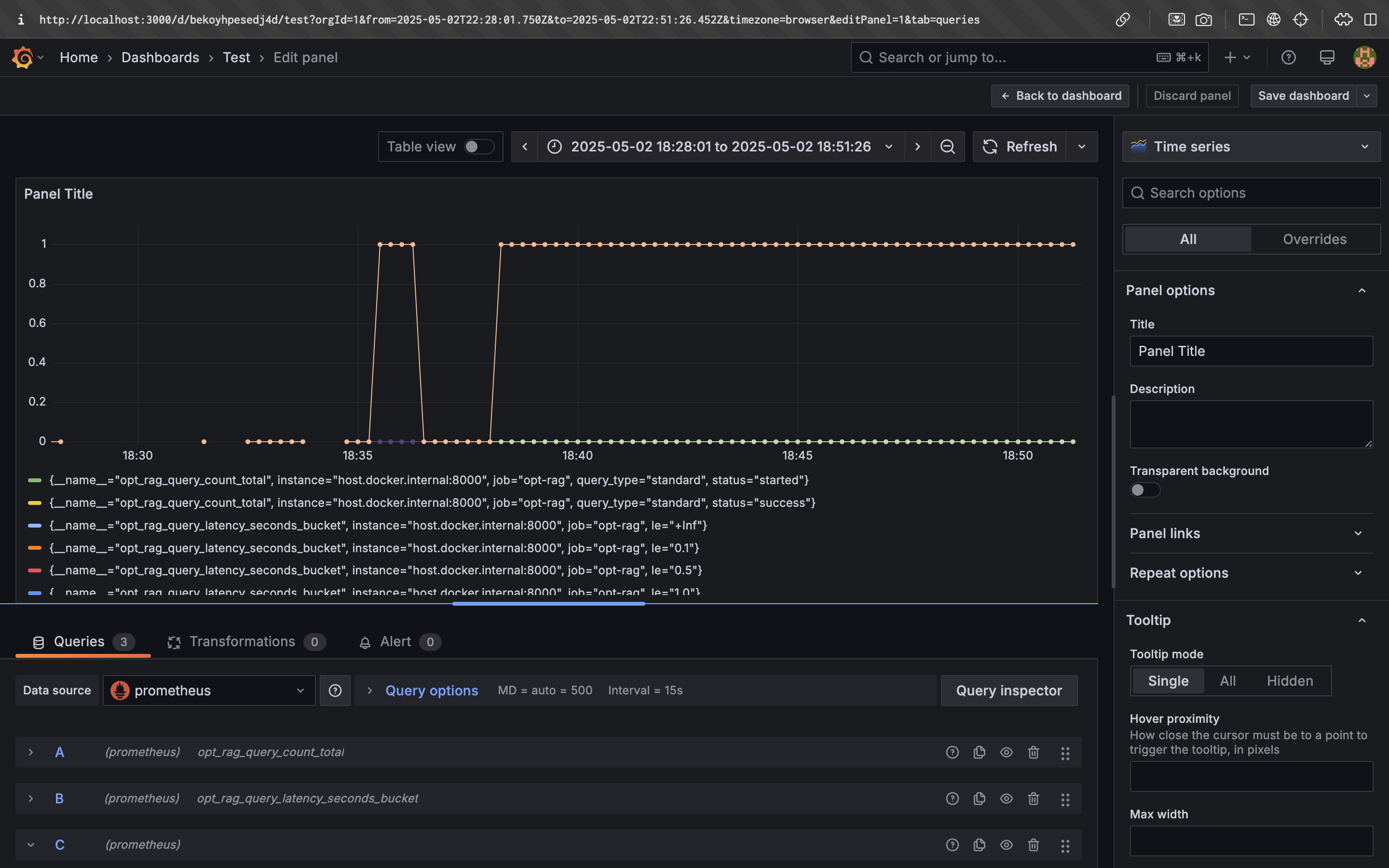

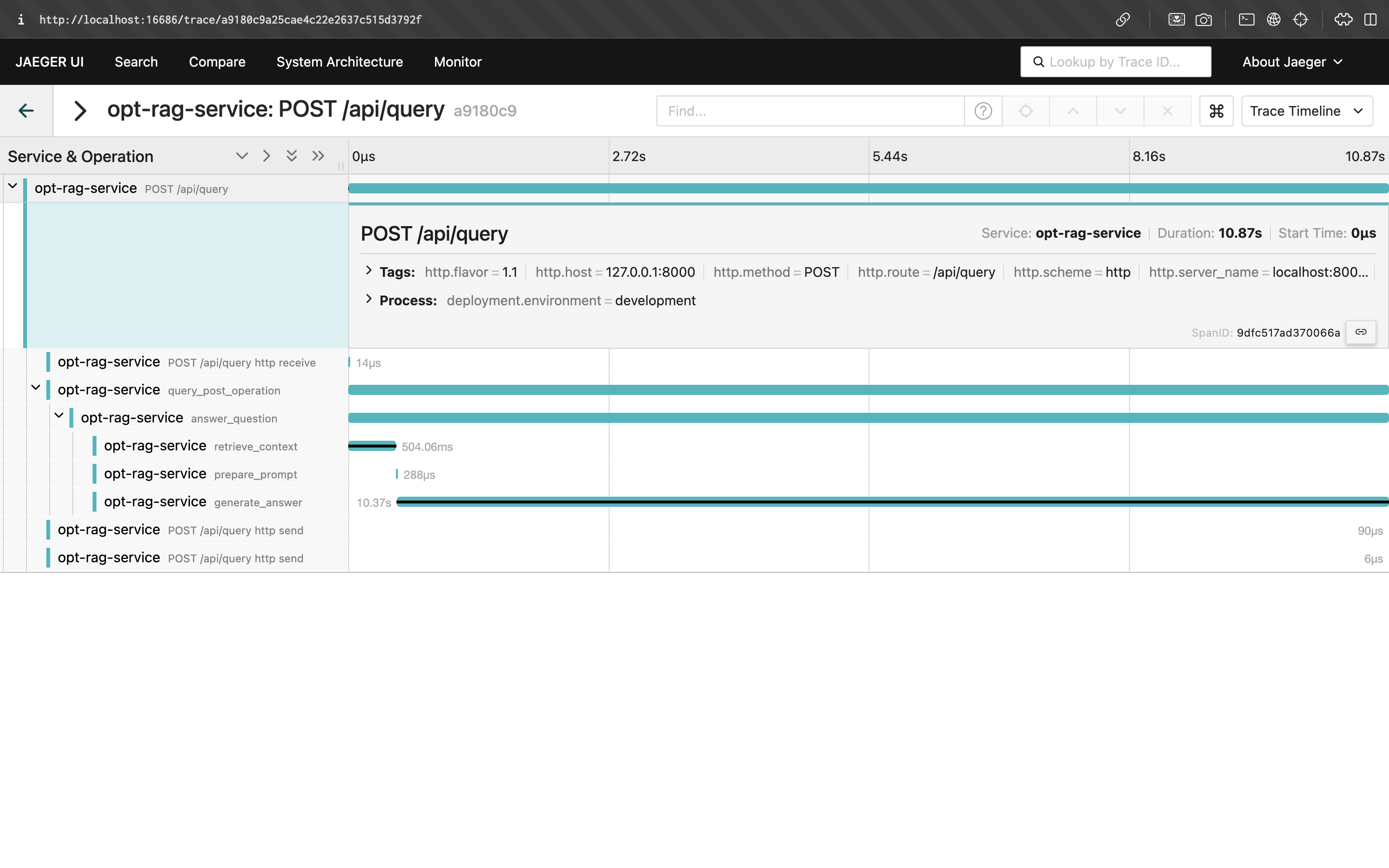

- Observability: Prometheus, Grafana, Jaeger

- Source Code: LLM-Agent-for-Visa

System Architecture

The application is deployed as containerized microservices using Docker Compose and Kubernetes. It combines a FastAPI backend for the core RAG logic, a Streamlit frontend for user interaction, and a FAISS vector store for semantic search across visa documents. Because each component runs as a separate service, components can be scaled independently, and a failure in one service is less likely to take down the others.
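To make the retrieval step concrete, here is a minimal, dependency-free sketch of what happens at query time. It ranks stored chunks by cosine similarity to the query embedding, keeps the top k, and assembles them into a grounded prompt. This is not the project's code. A FAISS flat inner-product index over L2-normalized vectors computes the same exact scores, only much faster. The `Chunk` type, the `normalize`, `top_k`, and `build_prompt` helpers, the prompt wording, and the three-dimensional toy embeddings are all hypothetical. A real deployment would use vectors produced by an embedding model.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    """A document passage and its (hypothetical) embedding vector."""
    text: str
    embedding: List[float]


def normalize(v: List[float]) -> List[float]:
    """L2-normalize a vector so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def top_k(query_embedding: List[float], chunks: List[Chunk], k: int = 2) -> List[Chunk]:
    """Exact (brute-force) cosine-similarity search over all chunks."""
    q = normalize(query_embedding)
    scored = []
    for chunk in chunks:
        e = normalize(chunk.embedding)
        scored.append((sum(a * b for a, b in zip(q, e)), chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # highest similarity first
    return [chunk for _, chunk in scored[:k]]


def build_prompt(question: str, context: List[Chunk]) -> str:
    """Place the retrieved chunks in the prompt so the answer stays grounded."""
    numbered = "\n\n".join(f"[{i + 1}] {c.text}" for i, c in enumerate(context))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {question}\nAnswer:"
    )


# Toy corpus: texts and 3-D embeddings are made up for illustration.
chunks = [
    Chunk("(toy) Chunk about OPT application timing.", [0.9, 0.1, 0.0]),
    Chunk("(toy) Chunk about the STEM OPT extension.", [0.7, 0.6, 0.0]),
    Chunk("(toy) Chunk about campus dining hours.", [0.0, 0.1, 0.95]),
]
query_embedding = [1.0, 0.2, 0.0]  # stands in for the embedded user question
print(build_prompt("How early can I apply for OPT?", top_k(query_embedding, chunks, k=2)))
```

With a FAISS index in place of the loop, the contract stays the same: a query vector goes in, and the k most similar chunks come out to be placed in the prompt.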

Key Features

The project includes several production-grade features that enhance its reliability and flexibility:

- Local LLM Inference: By default, uses a locally deployed Qwen2.5-1.5B model for generation, so user queries and documents stay on the deployment's own infrastructure and no third-party API is required.

- Dual-Mode LLM: Uses a modular configuration to switch between the local model and the OpenAI API, supporting both offline, privacy-focused deployments and faster, API-driven development.

- Production-Grade Observability: Implements a comprehensive monitoring stack with Prometheus for metrics collection, Grafana for real-time dashboards, and Jaeger for distributed tracing across all microservices.
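The dual-mode switch can be sketched as a small factory. It reads the deployment configuration and returns one of two interchangeable backends that share a `generate(prompt)` interface. This is an illustrative sketch, not the project's implementation. The `LLM_MODE`, `LOCAL_MODEL_PATH`, and `OPENAI_MODEL` variables, the backend class names, the default values, and the placeholder `generate` bodies are all hypothetical. Only `OPENAI_API_KEY` follows the OpenAI SDK's usual convention. Real backends would run Qwen2.5-1.5B inference locally, or call the OpenAI API, inside `generate`.

```python
import os
from typing import Mapping, Optional, Protocol


class LLMBackend(Protocol):
    """Interface every generation backend must satisfy."""

    def generate(self, prompt: str) -> str: ...


class LocalQwenBackend:
    """Hypothetical wrapper for a locally served Qwen2.5-1.5B model."""

    def __init__(self, model_path: str) -> None:
        self.model_path = model_path

    def generate(self, prompt: str) -> str:
        # Placeholder: real code would run local inference on `prompt` here.
        return f"[local:{self.model_path}] {prompt}"


class OpenAIBackend:
    """Hypothetical wrapper for the OpenAI API."""

    def __init__(self, model: str, api_key: str) -> None:
        self.model = model
        self._api_key = api_key  # would be handed to an OpenAI client

    def generate(self, prompt: str) -> str:
        # Placeholder: real code would send `prompt` to the OpenAI API here.
        return f"[openai:{self.model}] {prompt}"


def make_backend(env: Optional[Mapping[str, str]] = None) -> LLMBackend:
    """Pick a backend from configuration; defaults to local (offline) mode."""
    env = os.environ if env is None else env
    mode = env.get("LLM_MODE", "local").strip().lower()
    if mode == "local":
        return LocalQwenBackend(env.get("LOCAL_MODEL_PATH", "models/qwen2.5-1.5b"))
    if mode == "openai":
        api_key = env.get("OPENAI_API_KEY")
        if not api_key:
            raise RuntimeError("LLM_MODE=openai requires OPENAI_API_KEY to be set")
        return OpenAIBackend(env.get("OPENAI_MODEL", "placeholder-model"), api_key)
    raise ValueError(f"Unknown LLM_MODE: {mode!r} (expected 'local' or 'openai')")


backend = make_backend({"LLM_MODE": "local"})
print(backend.generate("When can I apply for OPT?"))
```

Because the rest of the RAG pipeline depends only on `generate`, switching between offline and API-backed operation becomes a configuration change rather than a code change.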